Now that we know what Open WebUI is, let’s get it set up on your computer.

Running Open WebUI this way requires three pieces of software:

- Docker: Free software that makes it easy to install and run other software in self-contained packages called containers. In this guide, Docker runs Open WebUI.

- Open WebUI: Free software that gives you a chat interface in your web browser for interacting with AI models privately and securely on your own computer.

- Ollama: Free software that downloads AI models and runs them locally on your computer, so Open WebUI can use them.

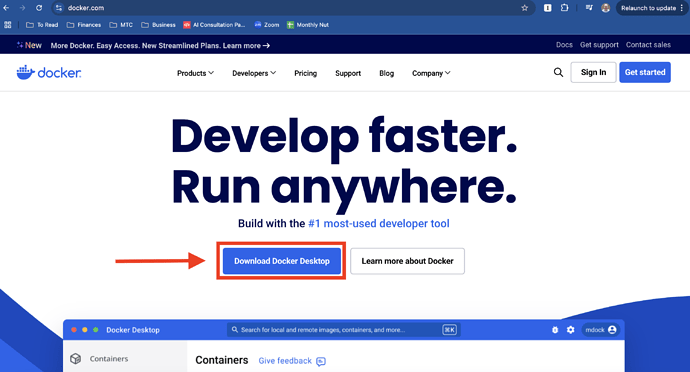

Step 1: Download Docker Desktop

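Go to docker.com and click the download Docker button:

Go through the free sign-up process, then install Docker Desktop and open it. Docker Desktop needs to be running before you start Step 3.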
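To confirm the install worked, you can run a quick check in a terminal. This is a minimal sketch, assuming a bash shell: Terminal on Mac, or Git Bash or WSL on Windows (Command Prompt will not run it). `check_docker` is a hypothetical helper name, not part of Docker. It uses the real `docker --version` and `docker info` commands, and `docker info` fails when Docker Desktop is installed but not running.

```shell
#!/usr/bin/env bash
# Sketch: check that Docker is installed and that Docker Desktop's engine is running.
# check_docker is a hypothetical helper name, not part of Docker.

check_docker() {
  # 'command -v' succeeds only if the program is on the PATH.
  if ! command -v docker >/dev/null 2>&1; then
    echo "Docker not found: install Docker Desktop from docker.com first."
    return 1
  fi
  docker --version
  # 'docker info' fails when Docker Desktop is installed but not running.
  if docker info >/dev/null 2>&1; then
    echo "Docker engine is running."
  else
    echo "Docker is installed but not running: open Docker Desktop."
    return 1
  fi
}

# '|| true' keeps a failed check from stopping a larger script.
check_docker || true
```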

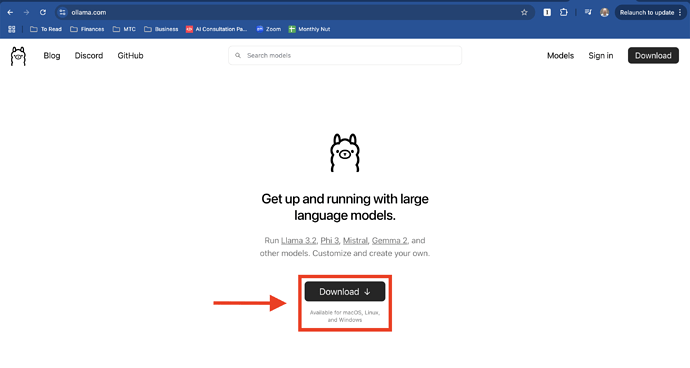

Step 2: Download Ollama

Go to ollama.com and click the download button, then install and open Ollama.
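A similar check can confirm that Ollama is installed and that its local server is answering. This is a sketch under stated assumptions: it needs bash and curl, `check_ollama` is a hypothetical helper name, and it assumes Ollama's default address, `http://localhost:11434`. The `ollama --version` and `ollama serve` commands are part of Ollama's command line.

```shell
#!/usr/bin/env bash
# Sketch: check that Ollama is installed and its local server is answering.
# check_ollama is a hypothetical helper; http://localhost:11434 is assumed to be
# Ollama's address (its default). Requires curl.

check_ollama() {
  local url="${1:-http://localhost:11434}"
  if ! command -v ollama >/dev/null 2>&1; then
    echo "Ollama not found: install it from ollama.com first."
    return 1
  fi
  ollama --version
  # -f makes curl fail on HTTP errors, -s hides progress, --max-time avoids hanging.
  if curl -fs --max-time 5 "$url" >/dev/null; then
    echo "Ollama server is running at $url."
  else
    echo "Ollama is installed but not answering at $url: open the Ollama app or run 'ollama serve'."
    return 1
  fi
}

# '|| true' keeps a failed check from stopping a larger script.
check_ollama || true
```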

Step 3: Install Open WebUI

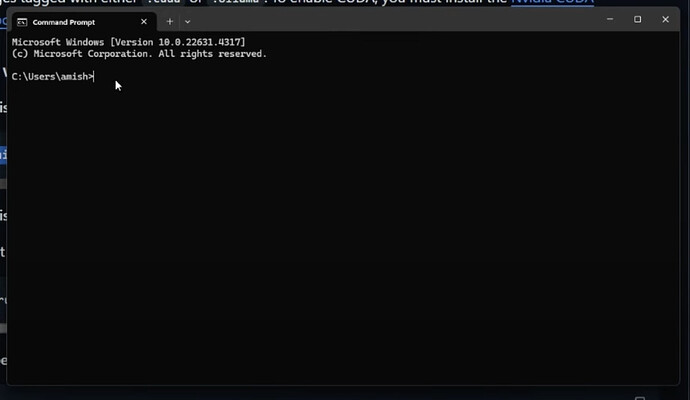

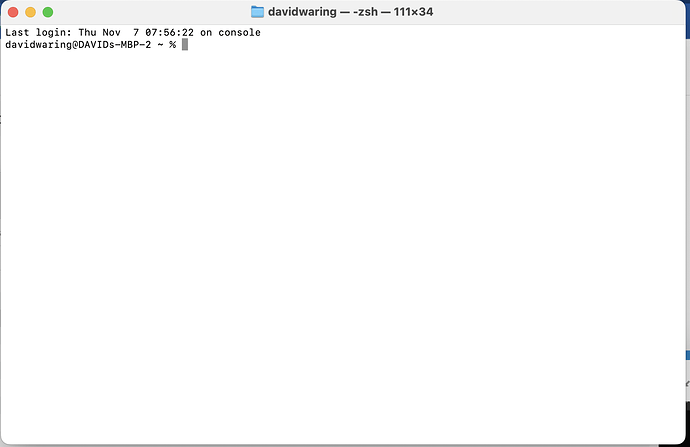

Open Command Prompt (Windows) or Terminal (Mac) on your computer.

On Windows, search for Command Prompt in the Start menu. On Mac, Terminal is in the Applications folder, inside Utilities.

On Windows, it looks like this:

On Mac, it looks like this:

Make sure Docker Desktop is running. Then copy and paste the following command into the window and press Enter:

```shell
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```
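Because `-d` runs the container in the background, the command returns almost immediately. To check that the container actually started, you can ask Docker for its status. This is a minimal bash sketch. `container_status` is a hypothetical helper, and the name `open-webui` comes from the `--name` flag in the command above. `docker ps`, `docker start`, and `docker logs` are standard Docker commands.

```shell
#!/usr/bin/env bash
# Sketch: confirm the container started by the docker run command is up.
# container_status is a hypothetical helper; "open-webui" comes from the --name flag.

container_status() {
  local name="${1:-open-webui}"
  local status
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker not found"
    return 1
  fi
  # --filter name=... matches running containers whose name contains $name;
  # --format prints only the status column, e.g. "Up 2 minutes".
  status="$(docker ps --filter "name=$name" --format '{{.Status}}' 2>/dev/null)"
  if [ -n "$status" ]; then
    echo "$name: $status"
  else
    echo "$name is not running; try 'docker start $name' and check 'docker logs $name'"
    return 1
  fi
}

# '|| true' keeps a failed check from stopping a larger script.
container_status open-webui || true
```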

Step 4: Open Your Web Browser and Go to localhost:3000

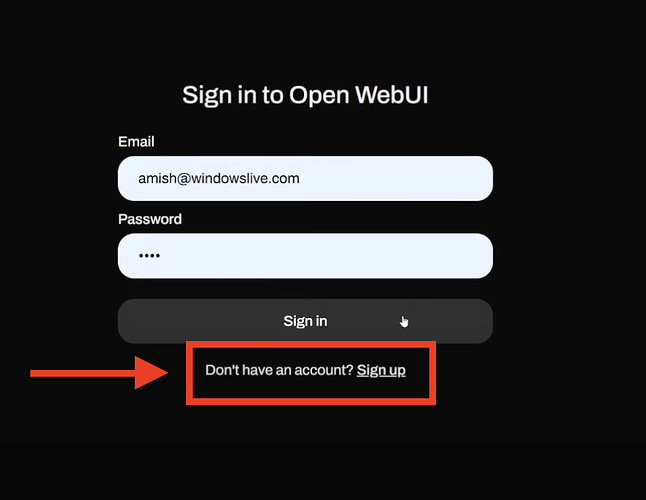

This will bring up the Open WebUI sign-in page.

Sign up to create a login. This information is stored locally on your computer and is not sent anywhere else.
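If localhost:3000 does not load right away, the container may still be starting. The first start can take a while. The sketch below polls the page until it answers. It assumes bash, curl, and the `-p 3000:8080` port mapping from the `docker run` command. `wait_for_webui` is a hypothetical helper, and the 30 tries, two seconds apart, are arbitrary defaults.

```shell
#!/usr/bin/env bash
# Sketch: poll Open WebUI until it answers, since the first start can take a while.
# wait_for_webui is a hypothetical helper; the URL assumes the -p 3000:8080 mapping.
# Requires curl.

wait_for_webui() {
  local url="${1:-http://localhost:3000}"
  local tries="${2:-30}"
  local i code
  for ((i = 1; i <= tries; i++)); do
    # -w '%{http_code}' prints only the status code ("000" if nothing answered);
    # -L follows redirects so the final page's code is reported.
    code="$(curl -sL -o /dev/null --max-time 5 -w '%{http_code}' "$url" || true)"
    if [ "$code" = "200" ]; then
      echo "Open WebUI is ready at $url"
      return 0
    fi
    # Pause between attempts, but not after the last one.
    if [ "$i" -lt "$tries" ]; then
      sleep 2
    fi
  done
  echo "No response from $url after $tries tries"
  return 1
}
```

After pasting it into a terminal, run `wait_for_webui` and open localhost:3000 in your browser once it reports that the page is ready.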

Step 5: Download Your First Model

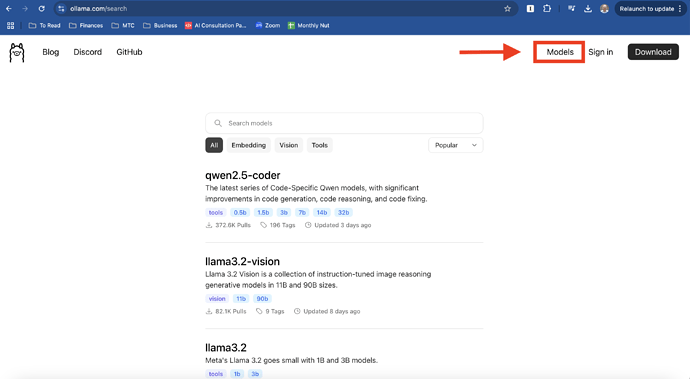

Go to ollama.com and click the Models link to see all available AI models:

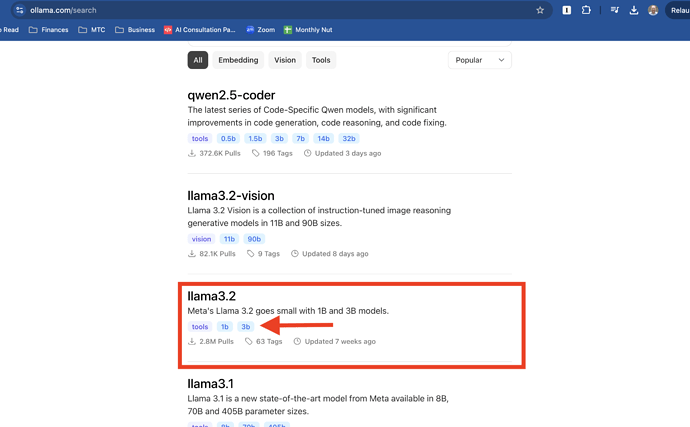

We recommend starting with the 3B (3 billion parameter) version of llama3.2. Llama 3.2 is one of the most popular open source AI models, and the 3B version is small enough to run on most computers.

To do this, click 3b under llama3.2:

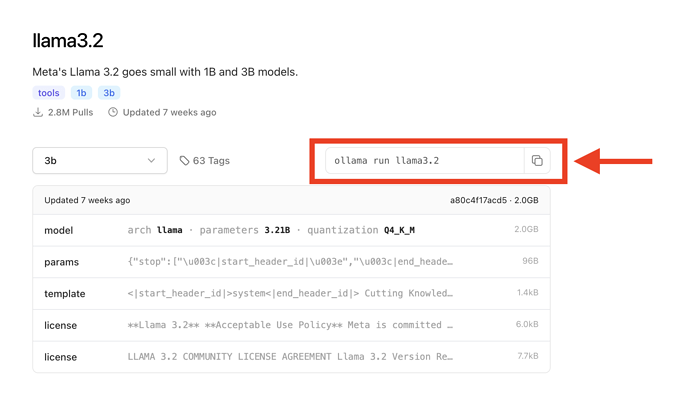

This will bring up the model page, where you can copy the run command.

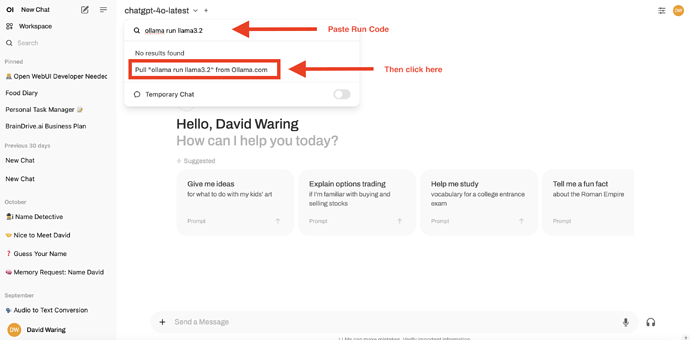

Once you have copied the run command, go back to Open WebUI (localhost:3000) and go to Workspace. Then paste the llama3.2 3b run command you copied from Ollama and pull the model.

This will download the model onto your computer.
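If you prefer the terminal, you can also pull the model with Ollama's own command line. Because Open WebUI is connected to the same Ollama install in this setup, the model should then appear in Open WebUI as well. This is a minimal bash sketch. `pull_model` is a hypothetical helper, and `llama3.2:3b` is the 3b tag of llama3.2 on ollama.com. `ollama pull` and `ollama list` are standard Ollama commands.

```shell
#!/usr/bin/env bash
# Sketch: pull a model from the terminal instead of through Open WebUI.
# pull_model is a hypothetical helper; "llama3.2:3b" is the 3b tag of llama3.2.

pull_model() {
  local model="${1:-llama3.2:3b}"
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found"
    return 1
  fi
  # 'ollama pull' downloads the model; 'ollama list' then shows every local model.
  ollama pull "$model" && ollama list
}

# '|| true' keeps a failed pull from stopping a larger script.
pull_model llama3.2:3b || true
```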

You’ve now downloaded your first model! To see that it runs privately, disconnect from the internet, go back to localhost:3000, and chat with your first private AI model!
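You can run the same offline test from a terminal. With the internet disconnected, a reply from the model shows it is running entirely on your computer. This is a sketch assuming bash and that llama3.2:3b has already been pulled. `ask_local_model` is a hypothetical helper, and it relies on `ollama run` accepting a prompt argument, which prints one answer and exits.

```shell
#!/usr/bin/env bash
# Sketch: with the internet disconnected, send the model a single prompt from the terminal.
# ask_local_model is a hypothetical helper; assumes llama3.2:3b was already pulled.

ask_local_model() {
  local model="$1"
  local prompt="$2"
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found"
    return 1
  fi
  # Given a prompt argument, 'ollama run' prints one answer and exits
  # instead of opening an interactive chat.
  ollama run "$model" "$prompt"
}

# '|| true' keeps a failed run from stopping a larger script.
ask_local_model llama3.2:3b "In one sentence, what does running a model locally mean?" || true
```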

Next Steps

Continue to our next lesson where we walk you through Open WebUI’s features and functionality.